40 must-read AI enterprise case studies

Plus, key takeaways to help you level up fast.

WELCOME, EXECUTIVES AND PROFESSIONALS.

Thousands of enterprise AI case studies have emerged over the past year, spanning AI agents, agentic AI, and generative AI.

Having reviewed 3,427 of them, we found that many lack measurable outcomes, technical depth, and innovative approaches.

But these 40 go beyond the hype:

MCKINSEY

Image source: QuantumBlack, AI by McKinsey

Brief: QuantumBlack, McKinsey's AI-focused capability, shared how it is leveraging the Model Context Protocol (MCP), including integration into Brix, its proprietary gen AI asset marketplace, to enable seamless access and systematic reuse.

Breakdown:

Brix lets teams publish MCP servers with tools, prompts, configs, and docs, creating reusable assets others can easily discover and reuse.

QuantumBlack has 10+ MCPs in development, supporting 115+ reusable assets. MCPs now rival data pipelines/connectors as top assets on Brix.

With 1,500+ staff across 40+ sites, reuse has been key to consistent and fast delivery of 15,000+ AI projects. MCPs now power the next S-curve.

Brix is a mesh-compatible “AI Asset Registry” (see image above), built to deploy alongside broader agentic AI capabilities.

Embedding Brix MCP into GitHub Copilot let engineers reuse assets from their IDE, reducing discovery time by 55%.

Why it’s important: AI presents vast opportunities and challenges, for instance, in interoperability, reusability, and scalability. Reusability goes beyond engineering best practices; it’s a key enabler of scale. With effective MCP reuse, enterprises can accelerate delivery and reduce maintenance overhead.
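The publish-and-discover pattern described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Brix's actual design: `Asset`, `AssetRegistry`, and the sample entries are hypothetical, and a real MCP server would be built with an MCP SDK rather than plain dataclasses.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Asset:
    """A reusable asset entry; all fields are illustrative assumptions."""
    name: str
    kind: str  # e.g. "mcp_server" or "data_pipeline"
    description: str
    tags: frozenset = field(default_factory=frozenset)


class AssetRegistry:
    """In-memory stand-in for an asset registry (hypothetical)."""

    def __init__(self):
        self._assets = {}

    def publish(self, asset):
        # Reject duplicate names so every asset stays uniquely discoverable.
        if asset.name in self._assets:
            raise ValueError(f"asset already published: {asset.name}")
        self._assets[asset.name] = asset

    def search(self, keyword, kind=None):
        # Case-insensitive match on name, description, or tags.
        needle = keyword.lower()
        hits = []
        for asset in self._assets.values():
            if kind is not None and asset.kind != kind:
                continue
            haystack = " ".join(
                [asset.name, asset.description, *sorted(asset.tags)]
            ).lower()
            if needle in haystack:
                hits.append(asset)
        return sorted(hits, key=lambda a: a.name)


registry = AssetRegistry()
registry.publish(Asset("forecast-tools", "mcp_server",
                       "Demand forecasting tools", frozenset({"forecasting"})))
registry.publish(Asset("sales-connector", "data_pipeline",
                       "Loads sales data", frozenset({"sales"})))
registry.publish(Asset("pricing-tools", "mcp_server",
                       "Pricing prompts and tools", frozenset({"pricing", "sales"})))

# Find MCP servers relevant to sales work.
mcp_sales_assets = [a.name for a in registry.search("sales", kind="mcp_server")]
```

Filtering by `kind` is one way to let engineers ask for MCP servers specifically while the same registry also holds pipelines and connectors.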

ANTHROPIC

Image source: Anthropic

Brief: Anthropic shared lessons from taking Claude’s multi-agent research capabilities from prototype to production, outlining proven principles others can apply when building and deploying multi-agent systems.

Breakdown:

Research is dynamic and nonlinear; AI agents excel in this setting by adapting to new information and following evolving lines of inquiry.

Anthropic’s Research feature plans based on user input, then uses tools to create parallel agents that search for information simultaneously.

The company encoded expert human research strategies into prompts, like task decomposition and source quality evaluation.

Effective agent evaluation starts with small samples, scales with LLM-as-judge, and relies on human review to catch what automation misses.

Anthropic addresses production reliability and engineering challenges such as the stateful nature of agents, compounding errors, and more.

Why it’s important: Anthropic's experience demonstrates how multi-agent research systems can reliably scale through careful engineering, extensive testing, precise prompt and tool design, and close collaboration among research, product, and engineering teams with deep AI agent knowledge.
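The plan-then-parallelize flow described above might look roughly like the sketch below. It is not Anthropic's implementation: `plan`, `run_subagent`, and `research` are hypothetical names, and the subagent is a stub where a real system would call an LLM and search tools.

```python
from concurrent.futures import ThreadPoolExecutor


def plan(query):
    """Lead-agent planning step: split a query into subtasks (stubbed)."""
    return [
        f"{query}: background",
        f"{query}: recent developments",
        f"{query}: open questions",
    ]


def run_subagent(subtask):
    """Stand-in for a subagent that would call search tools and an LLM."""
    return {"subtask": subtask, "finding": f"summary for '{subtask}'"}


def research(query, max_workers=3):
    subtasks = plan(query)
    # Subagents run concurrently; map() returns results in subtask order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        findings = list(pool.map(run_subagent, subtasks))
    # The lead agent then synthesizes the findings into one report.
    return "\n".join(f"- {f['finding']}" for f in findings)


report = research("solid-state batteries")
```

Threads suit this sketch because real subagents would spend most of their time waiting on network calls.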

OPENAI

Image source: OpenAI

Brief: OpenAI published new guidance on how to design and implement a multi-agent system with best practices using its Agents SDK and a real-world example of an investment research task.

Breakdown:

Specialist agents (Macro, Quant, Fundamental) collaborate under a Portfolio Manager agent to tackle complex investment research questions.

The system uses an "agents as tools" approach: the central agent calls other agents as if they were tools to handle specific subtasks when generating answers.

For instance, a user query “How would an interest rate cut affect GOOGL?” routes to the manager agent, which delegates to specialist agents.

Each specialist agent leverages tools such as custom Python functions, Code Interpreter, WebSearch, and external MCP servers.

OpenAI shares design best practices to improve research quality, speed up results, and make systems easier to extend and maintain.

Why it’s important: This example shows how to combine agent specialization, parallel execution, and orchestration using the OpenAI Agents SDK, offering a clear blueprint for building effective multi-agent workflows for research, analysis, or other complex tasks requiring expert collaboration.
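The "agents as tools" idea can be illustrated without any SDK. The sketch below is plain Python and does not use the OpenAI Agents SDK; the specialist stubs and the keyword-based `choose_tools` rule are assumptions standing in for LLM-driven tool selection.

```python
from typing import Callable, Dict, List

# Each specialist is exposed to the manager as a plain callable "tool".
SPECIALISTS: Dict[str, Callable[[str], str]] = {
    "macro": lambda q: f"[macro] view on: {q}",
    "quant": lambda q: f"[quant] view on: {q}",
    "fundamental": lambda q: f"[fundamental] view on: {q}",
}


def choose_tools(question: str) -> List[str]:
    """Toy routing rule; a real manager agent would let the model decide."""
    chosen = []
    lowered = question.lower()
    if "rate" in lowered or "inflation" in lowered:
        chosen.append("macro")
    words = question.replace("?", "").split()
    # Treat an all-caps word of two or more letters as a ticker symbol.
    if any(w.isupper() and w.isalpha() and len(w) >= 2 for w in words):
        chosen += ["fundamental", "quant"]
    return chosen or ["fundamental"]


def portfolio_manager(question: str) -> str:
    # The manager keeps control of the conversation and calls specialists
    # like functions, then merges their outputs into one answer.
    sections = [SPECIALISTS[name](question) for name in choose_tools(question)]
    return "\n".join(sections)


question = "How would an interest rate cut affect GOOGL?"
answer = portfolio_manager(question)
```

Keeping the manager in charge of the final answer, rather than handing the conversation off, is what distinguishes this pattern from agent handoffs.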

OPENAI

Image source: OpenAI

Brief: OpenAI revealed how Intercom built an AI customer service platform by staying rigorous in evaluation, grounded in performance, and flexible in design, offering lessons for other enterprises building with LLMs.

Breakdown:

Intercom built Fin, an AI agent that now resolves millions of customer queries monthly and ships new capabilities in days, not quarters.

Experiment early and often. GPT-4.1 outperformed GPT‑4o (reasoning) for refunds, delivering more reliable, cheaper, and faster outcomes.

Measure what works, and why. Fin is benchmarked on real chats to assess brand tone and functionality, then validated with live A/B testing.

Fin’s flexible architecture supports multimodal inputs, routes complex queries, and enables model switching without reengineering.

Intercom is expanding beyond customer support, bringing faster resolutions and better experiences across support, ops and product teams.

Why it’s important: Intercom's leadership acted decisively: redirecting resources from non-AI work, forming a cross-functional task force, and investing $100M to replatform the business. Their experience offers practical insights, especially for companies aiming to transform customer experience.
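Benchmarking candidate models on the same set of real conversations, then switching to the winner, can be sketched as follows. This is an illustrative harness, not Intercom's tooling: the two model stubs, the test cases, and the exact-match scoring are all assumptions.

```python
def model_a(query):
    # Stub model: only knows how to handle refunds.
    return "refund issued" if "refund" in query else "escalate"


def model_b(query):
    # Stub model: handles refunds and password resets.
    if "refund" in query:
        return "refund issued"
    if "password" in query:
        return "reset link sent"
    return "escalate"


MODELS = {"model_a": model_a, "model_b": model_b}

# Hypothetical benchmark cases: (customer query, expected outcome).
CASES = [
    ("refund for order 12", "refund issued"),
    ("forgot my password", "reset link sent"),
    ("refund please", "refund issued"),
    ("talk to a human", "escalate"),
]


def resolution_rate(model, cases):
    """Share of cases where the model produced the expected outcome."""
    hits = sum(1 for query, expected in cases if model(query) == expected)
    return hits / len(cases)


scores = {name: resolution_rate(fn, CASES) for name, fn in MODELS.items()}
# Because every model shares one call signature, switching is a lookup.
best_model = max(scores, key=scores.get)
```

An offline score like this would only be a first filter; the source notes that results are then validated with live A/B testing.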

ERICSSON

Image source: Ericsson

Brief: Ericsson shared how it is leveraging agentic AI to automate and accelerate the development and deployment of AI applications, both internally and for clients, across telecom operations and service delivery.

Breakdown:

Ericsson’s Telco Agentic AI Studio automates the creation of agentic applications for operations and business support systems.

A set of foundational “worker agents” act as orchestrators, coordinating specialized generative AI agents to perform telecom-specific tasks.

These LLM-driven systems possess deep telecom expertise, designed to build, test, package, and deploy domain-specific AI applications.

The Telco Agentic AI Studio is structured around three layers (intent, multi-agent, and knowledge), enabling agentic AI at scale.

It’s transforming how telecom services are sold and delivered, supporting use cases from customer personalization to network optimization.

Why it’s important: The rapid evolution of LLMs into agents, and the growing demand for automation, is accelerating the rise of agentic AI in telecom and beyond. Enterprises like Ericsson are building accelerators to enable outcome-driven AI and fuel continuous innovation.

META

Image source: Meta

Brief: Meta shared how Aitomatic, which transforms industrial expertise into AI agents, built a Llama-powered Domain-Expert Agent (DXA) to provide expert guidance to field engineers at an integrated circuit (IC) manufacturer.

Breakdown:

The IC producer faced support issues as field engineers struggled to access specialized knowledge, causing delays and inconsistent service.

Aitomatic built a Llama 3.1 70B-powered DXA to capture and scale expert knowledge. Llama was chosen for its customizability and versatility.

DXA development involved capturing expert knowledge, augmenting with synthetic data to expand scenario coverage, and deployment.

The firm anticipates 3x faster issue resolution and a 75% first-attempt success rate, up from 15–20%, with its newly deployed DXA.

With the DXA's efficacy, Aitomatic aims to enable the development of further DXAs, potentially automating the IC design process itself.

Why it’s important: Field engineers now handle customer inquiries with greater speed and independence from senior staff. Using open-source Llama, the IC design firm retains full ownership of its DXA, trained on sensitive, company-specific knowledge, eliminating dependency on proprietary AI models.

MCKINSEY

Image source: McKinsey & Company

Brief: Deutsche Telekom partnered with McKinsey to develop a gen AI-powered learning and coaching engine, helping to upskill 8,000 human agents in the field and call centers to better meet customer needs.

Breakdown:

Deutsche Telekom saw that traditional learning programs were resulting in substantial variation in performance across agents.

They sought to shift from reliance on individual coaching to an AI engine that would power hyper-personalized learning at scale.

The team spent six weeks diagnosing agent needs with millions of data points, then four months building, testing, and refining the MVP solution.

It’s built into agent workflows. For example, if an agent struggles with eSIM activation, they’re prompted to watch a quick training video.

Operational efficiency has improved, and the likelihood of customers recommending the company has increased by 14%.

Why it’s important: Deutsche Telekom demonstrates how enterprises can quickly evolve to deliver scalable, efficient outcomes with AI. Deutsche Telekom SVP Peter Meier van Esch said, “The impact of this work has been profound,” with employees now better equipped to serve customers.

OPENAI

Image source: OpenAI

Brief: OpenAI published a case study on its work with Endex, a company developing a financial analyst AI agent. By integrating OpenAI’s reasoning models, Endex is achieving enhanced performance in tasks requiring structured thinking and deep analysis.

Breakdown:

Endex previously used complex prompts, chained completions, and verification steps. With OpenAI o1, it's now simpler without sacrificing accuracy.

With OpenAI o3-mini, Endex gains 3x faster intelligence, enabling multi-step workflows like automating financial model reconciliation.

Endex identifies discrepancies in financial data, flagging restatements and inconsistencies with citations, freeing analysts' time for decisions.

OpenAI’s o1 vision capabilities allow Endex to process investor presentations, internal decks, Excel models, and 8-Ks, enhancing analysis.

Endex automates detailed reports, reducing manual financial analysis, letting professionals focus on strategy instead of data formatting.

Why it’s important: Finance professionals require structured, referenceable reasoning, a challenge for non-reasoning LLMs. OpenAI's o-series models, with long context windows and advanced reasoning capabilities, address this need.

SHOPIFY

Breakdown:

Shopify’s manual listing process led to incomplete attributes and miscategorization, reducing product visibility in search results.

The team built two AI agents: one to support listing creation and another to extract metadata from billions of product images and descriptions.

Proprietary models were costly. LLaVA, an open-source Llama-based vision model, offered competitive results with no per-token inference fees.

QLoRA enabled training on modest hardware. LMDeploy compressed models and optimized compute, slashing memory and inference costs.

The AI agents improved metadata quality, SEO, and discovery, helping shoppers find more relevant products through natural language queries.

MCKINSEY

The problem: A large bank needed to modernize its legacy core system, which consisted of 400 pieces of software—a massive undertaking budgeted at more than $600 million. Large teams of coders tackled the project using manual, repetitive tasks, which resulted in difficulty coordinating across silos. They also relied on often slow, error-prone documentation and coding. While first-generation gen AI tools helped accelerate individual tasks, progress remained slow and laborious.

The agentic approach: Human workers were elevated to supervisory roles, overseeing squads of AI agents, each contributing to a shared objective in a defined sequence (Exhibit 3). These squads retroactively document the legacy application, write new code, review the code of other agents, and integrate code into features that are later tested by other agents prior to delivery of the end product. Freed from repetitive, manual tasks, human supervisors guide each stage of the process, enhancing the quality of deliverables and reducing the number of sprints required to implement new features.

Impact: More than 50 percent reduction in time and effort in the early adopter teams

MCKINSEY

The problem: Relationship managers (RMs) at a retail bank were spending weeks writing and iterating credit-risk memos to help make credit decisions and fulfill regulatory requirements (Exhibit 4). This process required RMs to manually review and extract information from at least ten different data sources and develop complex nuanced reasoning across interdependent sections—for instance, loan, revenue, and cash joint evolution.

The agentic approach: In close collaboration with the bank’s credit-risk experts and RMs, a proof of concept was developed to transform the credit memo workflow using AI agents. The agents assist RMs by extracting data, drafting memo sections, generating confidence scores to prioritize review, and suggesting relevant follow-up questions. In this model, the analyst’s role shifts from manual drafting to strategic oversight and exception handling.

Impact: A potential 20 to 60 percent increase in productivity, including a 30 percent improvement in credit turnaround
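Using confidence scores to prioritize human review can be sketched as a simple triage step. The names, the 0.8 threshold, and the sample scores below are hypothetical; the source does not describe how the scores are computed.

```python
from dataclasses import dataclass


@dataclass
class MemoSection:
    title: str
    draft: str
    confidence: float  # 0.0 to 1.0, assumed to come from the drafting agent


def review_queue(sections, threshold=0.8):
    """Return sections needing human review, least confident first."""
    flagged = [s for s in sections if s.confidence < threshold]
    return sorted(flagged, key=lambda s: s.confidence)


sections = [
    MemoSection("Loan structure", "draft text", 0.92),
    MemoSection("Revenue outlook", "draft text", 0.61),
    MemoSection("Cash position", "draft text", 0.78),
]

queue = [s.title for s in review_queue(sections)]
```

Sections above the threshold are not dropped from the memo; they simply fall outside the priority queue, which matches the shift toward oversight and exception handling.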

MCKINSEY

Image source: McKinsey & Company

Brief: McKinsey’s case study reveals how it transformed work with its gen AI platform, Lilli. The objective was to build a platform powered by its proprietary knowledge to accelerate and improve insights for its teams and clients.

Breakdown:

Proof of Concept (March 2023, 1 week): A small team built a lean prototype in 1 week and secured investment approval.

Roadmap & Operating Model (April 2023, 2 weeks): Aligned on priority use cases based on value, impact, feasibility and requirements. Set up cross-functional agile squads for delivery.

Development Decisions (May 2023, 2 weeks): Guided by a five-point framework that evaluated cost, scalability, performance, security, and timing. Combined a hyperscaler’s prebuilt model with five of its own smaller expert models for enhanced answer relevance.

Build, Test & Iterate (May 2023, build: 5 weeks, test: 3 weeks): Alpha tested with 200 users, with rapid feedback improving response quality.

Firmwide Rollout (July 2023, 3 months): Gradual rollout over 3 months, available to all employees by October 2023.

The platform helps employees quickly learn new topics, access McKinsey frameworks, analyze data, develop presentations in McKinsey's style, create project plan drafts, and more.

72% of employees are active on the platform, saving up to 30% of time and processing over 500,000 prompts monthly.

Why it’s important: McKinsey's swift development of Lilli demonstrates how enterprises can leverage GenAI to accelerate knowledge work and enhance productivity. McKinsey's full case study also details lessons learned and further insights into the platform.

ACCENTURE

Image source: Accenture / MIT

Brief: Accenture, in partnership with MIT, developed a tool to help clients redesign their workforces for generative AI. By analyzing data on tasks, skills, and job transitions, the tool offers insights into AI's impact and effective reskilling strategies.

Breakdown:

While 97% of CxOs believe GenAI will transform their company, only 5% of organizations are actively reskilling their workforce at scale.

The tool enables clients to experiment with simulation models, exploring various scenarios and comparing outcomes for better decision-making.

Adjustable parameters include AI adoption propensity, investment rate, and AI innovation speed, capturing when and how companies invest in AI.

Users can set simulation duration and upload a CSV file for around 70 parameters, or input requirements via an LLM-enabled chat interface.

Simulation results show job changes, task shifts, and skills needed, underscoring the importance of reskilling while tracking revenue growth and headcount shifts.

A results summary is also presented through a multi-model approach, transforming visuals into critical insights. Check out the infographic and demo.

Why it’s important: As gen AI starts to disrupt industries, enterprises should understand its impact on workforce dynamics to remain competitive. Accenture’s tool helps make decisions on reskilling and productivity optimization, enabling businesses to adapt and integrate GenAI for improved performance and growth.

LINKEDIN

Image source: LinkedIn

Brief: LinkedIn detailed how it built its AI Hiring Assistant with EON (Economic Opportunity Network), a set of custom models that improve candidate-role matching accuracy and efficiency.

Breakdown:

EON's custom Meta Llama models were trained on 200M tokens from LinkedIn's Economic Graph, including member and company data.

Reinforcement Learning from Human Feedback (RLHF) and Direct Preference Optimization (DPO) techniques were used for safety alignment.

LinkedIn adapted foundation models like Llama and Mistral, evaluating them on open-source and LinkedIn benchmarks (see image above).

LinkedIn found EON-8B (based on Llama 3.1) to be 75x cheaper than GPT-4, 6x cheaper than GPT-4o, and 30% more accurate than Llama-3.

LinkedIn is now enhancing its EON models with planning and reasoning capabilities to enable more personalized, agentic interactions.

Why it’s important: To capture ROI, enterprises are increasingly exploring the cost and customization benefits of open-source models. LinkedIn's EON showcases how in-house gen AI innovation with domain-adapted foundation models can improve the recruiter-candidate experience while reducing costs.

MCKINSEY

Image source: McKinsey & Company / MIT

Brief: McKinsey traditionally relied on manual processes to curate and tag documents in its internal knowledge repository, achieving ~50% accuracy. A new generative AI tool now labels 26,000 documents annually, improving accuracy and efficiency.

Breakdown:

McKinsey’s manual tagging system took 20 seconds per document with ~50% accuracy.

The GenAI tool uses zero-shot classification with GPT, reducing classification time to 3.6 seconds per document and improving accuracy to 79.8%.

The new system saves up to 676 hours of manual work per analyst each year.

Annually, 26,000 documents are automatically labeled, which will enhance metadata for 140,000 weekly user queries in its colleague GenAI chatbot, Lilli.

The solution boosts the search algorithm’s performance by improving relevance and reducing errors.

Why it’s important: Automating document classification enhances efficiency and accuracy while improving McKinsey's knowledge management and responsiveness to client needs.
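Zero-shot classification means asking a model to pick a label without any labeled training examples. The sketch below shows the general shape under stated assumptions: the label set, the prompt wording, and `fake_llm` are hypothetical, and a real system would replace `fake_llm` with a call to a hosted model.

```python
LABELS = ["strategy", "operations", "technology"]  # hypothetical taxonomy


def build_prompt(document, labels):
    """Describe the task and allowed labels; no examples are included."""
    options = ", ".join(labels)
    return (
        "Classify the document into exactly one of these categories: "
        f"{options}.\nAnswer with the category name only.\n\n"
        f"Document:\n{document}"
    )


def parse_label(response, labels):
    """Normalize the model's answer; fall back when it is off-list."""
    cleaned = response.strip().lower()
    return cleaned if cleaned in labels else "unlabeled"


def classify(document, llm, labels=LABELS):
    return parse_label(llm(build_prompt(document, labels)), labels)


def fake_llm(prompt):
    # Stand-in for a real model call, with messy casing and whitespace.
    return " Technology\n"


label = classify("Cloud migration roadmap for a retail client", fake_llm)
```

The `unlabeled` fallback keeps off-list answers out of the metadata, so they can be routed to a human instead.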

UBER

Image source: Uber

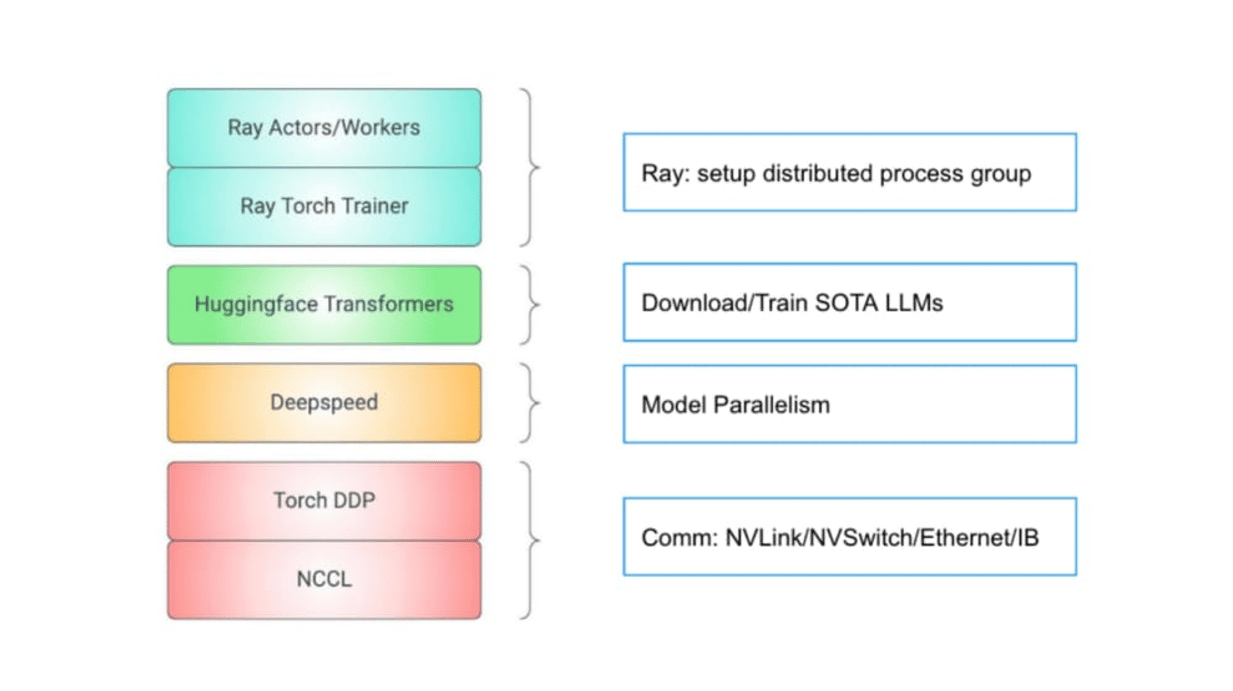

Brief: Uber's latest case study, featuring over 2,000 words and high-level architecture diagrams, explores how its in-house LLM training enhances flexibility, speed, and efficiency, using open-source models to help power generative AI-driven services.

Breakdown:

Uber leverages LLMs for Uber Eats recommendations, search, customer support chatbots, coding, and SQL query generation.

The case study details Uber’s infrastructure, training pipeline, and integration of open-source tools, libraries, and models to enhance LLM training.

Uber’s platform supports training the largest open source LLMs including full and parameter-efficient fine-tuning with LoRA and QLoRA.

Uber’s optimizations include CPU Offload and Flash Attention to achieve a 2-3x increase in throughput and a 50% reduction in memory usage.

Open-source models like Falcon, Llama, and Mixtral, along with tools like Hugging Face Transformers and DeepSpeed, enable Uber to adapt quickly.

Uber’s Ray and Kubernetes stack allows rapid integration of new open-source solutions, speeding up implementation.

Why it’s important: Uber demonstrates how open-source innovation, combined with robust infrastructure, can improve LLM training, offering a model to help other enterprises accelerate generative AI development and deployment.
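To see why parameter-efficient fine-tuning matters, consider how LoRA works: it freezes a weight matrix of shape d x k and trains two low-rank factors of shapes d x r and r x k instead. The arithmetic below uses illustrative dimensions, not figures from Uber's case study.

```python
def full_finetune_params(d, k):
    """Trainable parameters when the whole d x k matrix is updated."""
    return d * k


def lora_params(d, k, r):
    """Trainable parameters for LoRA factors B (d x r) and A (r x k)."""
    return r * (d + k)


# Illustrative layer size and rank (assumptions, not Uber's settings).
d = k = 4096
r = 8

full = full_finetune_params(d, k)   # 16,777,216
lora = lora_params(d, k, r)         # 65,536
trainable_fraction = lora / full    # 1/256, about 0.39%
```

QLoRA applies the same low-rank idea on top of a quantized base model, which reduces memory further.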

AIRBNB

Image source: Airbnb

Brief: Airbnb's case study highlights the evolution of its Automation Platform, moving from Version 1 with static workflows for conversational systems to Version 2, which supports large language model (LLM) applications.

Breakdown:

The initial platform version supported traditional conversational AI products but faced challenges including limited flexibility and scalability issues.

Experiments showed that LLM-powered conversations provide a more natural and intelligent user experience than more rules-based workflows, enabling open-ended dialogues and better understanding of nuanced queries.

Despite these benefits, LLM applications are still maturing for production use, with open challenges such as latency and hallucinations. These limitations affect their suitability for some large-scale, high-stakes scenarios involving millions of Airbnb customers.

For sensitive processes like claims processing that need strict data validation, traditional workflows are considered more reliable than LLMs.

Airbnb combines LLMs with traditional workflows to leverage the strengths of both approaches and enhance overall performance.

The upgraded platform facilitates LLM application development with capabilities such as chain of thought, context management, guardrails, and observability.

Why it’s important: Airbnb's Automation Platform evolution demonstrates the benefits of merging more traditional rules-based workflows with LLM technology to improve user experience and operational efficiency.
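The hybrid approach, with deterministic workflows for sensitive processes and LLMs for open-ended dialogue, can be sketched as a simple router. Everything here is hypothetical: the keyword-based intent check, the `R123456` reservation ID format, and the `llm_reply` stub are assumptions for illustration, not Airbnb's platform.

```python
import re


def detect_intent(message):
    """Toy intent detection; a production system would use a classifier."""
    return "claim" if "claim" in message.lower() else "general"


def claims_workflow(message):
    # Deterministic path: strict validation before anything is filed.
    match = re.search(r"\bR\d{6}\b", message)
    if match is None:
        return "Please provide a reservation ID (format R123456)."
    return f"Claim opened for reservation {match.group()}."


def llm_reply(message):
    # Stand-in for an LLM call that handles open-ended questions.
    return f"(LLM) Happy to help with: {message}"


def handle(message):
    # Sensitive intents go to the rules-based workflow, the rest to the LLM.
    if detect_intent(message) == "claim":
        return claims_workflow(message)
    return llm_reply(message)


valid_claim = handle("I want to file a claim for R123456")
missing_id = handle("I need to file a claim")
open_question = handle("Any tips for a week in Lisbon?")
```

Routing before generation means the LLM never sees requests that need strict data validation.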

BOSTON CONSULTING GROUP

Image source: Boston Consulting Group

Brief: A 27-slide publication from BCG offers 42 examples and nine deep dives on how leading enterprises (those scaling AI, per BCG's Build for the Future 2024 Global Study) are generating value with AI, including GenAI.

Breakdown:

The publication showcases 42 examples with measurable outcomes across nine functions: sales, customer service, pricing & revenue management, marketing, manufacturing, field forces, R&D, technology, and business operations.

For instance, a biopharma company used AI in R&D to accelerate drug discovery, achieving a 25% cycle time reduction, $25M in cost savings, and $50M–$150M in revenue uplift.

Nine detailed case studies showcase AI (including GenAI) transformation and impact across functions, such as a GenAI Co-pilot for relationship managers at a universal bank (sales).

Additional deep dives highlight AI for BPO call agents (customer service), GenAI for data governance at a payments provider (technology), AI transforming credit processing at a European bank (business operations), and more.

It explores how AI leaders (26% of enterprises successfully scaling AI value) reshape functions rather than merely deploying or inventing, along with other success factors.

Why it’s important: This publication articulates the transformative value of AI across a breadth of functions, offering deep dive examples and measurable outcomes, all presented in a clear, easily digestible format.

BMW

Image source: Amazon Web Services

Brief: This case study outlines how BMW Group, with BCG and AWS, implemented its 'Offer Analyst' GenAI application to improve procurement efficiency and accuracy by automating offer reviews and comparisons.

Breakdown:

BMW Group's traditional procurement process involved three main steps: document collection, review and preselection, and offer selection. These steps brought challenges such as manual effort, risk of errors, and less meaningful work.

The 'Offer Analyst' GenAI application is designed to enhance the offer evaluation process, with a user-friendly interface tailored to the needs of procurement experts.

The enhanced process includes RfP document uploads, offer uploads, information extraction, initial analysis (standard criteria) and tailored analysis (ad hoc criteria), download analysis, and interactive analysis (chat with your offer).

Key solution architecture components of the 'Offer Analyst' include frontend/UI, document storage, integration layer, GenAI layer, API layer, and security features, all built on a serverless AWS architecture for scalability and resilience.

BMW benefits from reduced manual proofreading time, improved decision-making, reduced errors through automated compliance checks, and increased employee satisfaction by enabling more engaging work.

Why it’s important: GenAI applications like 'Offer Analyst' are improving procurement, leading to greater operational efficiency, enhanced employee satisfaction, and a more effective procurement process.

UBER

Image source: Uber

Brief: Uber's case study explores its centralized Toolkit for building, managing, executing, and evaluating prompts across models. It details the prompt engineering lifecycle, architecture, evaluation, and production use cases.

Breakdown:

Uber’s Model Catalog offers descriptions, metrics, and usage guides for models, while the GenAI Playground allows users to test LLM capabilities.

The Prompt Builder automates prompt creation and helps users discover prompting techniques tailored to their specific use cases.

Prompts can be evaluated against datasets using LLM-based or custom code evaluators.

The architecture features a Prompt Template UI/SDK for managing templates and revisions, integrated with APIs like GetAPI and ExecuteAPI to interact with models.

Models and prompts are stored in ETCD and UCS, driving the Offline Generation and Prompt Evaluation Pipelines.

Prompt templates are reviewed before revisions, deployed with tags, and managed via ObjectConfig, Uber’s internal configuration system, for production deployment.

Why it’s important: Uber's toolkit enhances prompt consistency, reusability, and scalability, improving model performance while safeguarding production environments.
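Versioned prompt templates evaluated against a dataset with a custom code evaluator could look like the sketch below. It does not reflect Uber's internal APIs: `PromptTemplate`, `evaluate`, `exact_match`, the stub model, and the sample dataset are all hypothetical.

```python
from string import Template


class PromptTemplate:
    """A named template that keeps every revision (1-indexed)."""

    def __init__(self, name, text):
        self.name = name
        self.revisions = [text]

    def revise(self, text):
        self.revisions.append(text)
        return len(self.revisions)  # the new revision number

    def render(self, revision=None, **variables):
        # Default to the latest revision when none is specified.
        text = self.revisions[-1 if revision is None else revision - 1]
        return Template(text).substitute(**variables)


def exact_match(output, expected):
    """Custom code evaluator: case-insensitive exact comparison."""
    return output.strip().lower() == expected.strip().lower()


def evaluate(template, model, dataset, evaluator=exact_match):
    """Fraction of dataset rows where the model output passes."""
    passed = sum(
        evaluator(model(template.render(**row["inputs"])), row["expected"])
        for row in dataset
    )
    return passed / len(dataset)


def fake_model(prompt):
    # Naive stub model: keys on a single word, so negation fools it.
    return "positive" if "great" in prompt else "negative"


template = PromptTemplate("sentiment", "Classify the sentiment of: $text")
dataset = [
    {"inputs": {"text": "great ride"}, "expected": "positive"},
    {"inputs": {"text": "late driver"}, "expected": "negative"},
    {"inputs": {"text": "not great"}, "expected": "negative"},
]
score = evaluate(template, fake_model, dataset)  # 2 of 3 rows pass
```

Swapping `exact_match` for an LLM-based evaluator only requires passing a different function with the same signature.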

ADDITIONAL CASE STUDIES

Anthropic shared how Zapier leveraged Claude Enterprise to drive company-wide AI adoption with 800+ AI agents deployed.

Microsoft shared how EY is leveraging Copilot and agents to improve productivity and early plans to begin orchestrating entire agentic teams.

AWS and BCG combined gen AI sales nudges and proprietary algorithms to improve AWS Partner outreach, driving a 65% regional sales pipeline uplift.

Rakuten achieved 7 hours of autonomous coding and cut time-to-market by 79% using Claude Code for complex refactoring and feature delivery.

Cognizant published 14 pages on how multi-agent AI is transforming global exhibition experiences, including details of its AI accelerator.

LangChain shared case studies on how LinkedIn, BlackRock, and Uber built AI agents to improve hiring, asset management, and developer productivity.

OpenAI shared a case study on AI agents automating cybersecurity detection and response.

Capgemini - Proposal AI agent

BMW - Supplier AI agent

Meta - Consulting AI agent

Google - Automotive AI agent

Moody’s - Risk Analysis AI agent

NTT Data - Change Management Agent

Meta - Customer Support Agent

Uber - Agentic RAG

Pfizer - Accelerating Drug Dev

Comcast - Real-Time Call Response

Takeda - Faster Clinical Trials

Uber - Journey to Generative AI

Pinterest - Building Text-to-SQL

Grab - Classifying Data with Gen AI

L’Oreal - Launching GenAI as a Service in 3 Months

Amazon - Transforming Java Upgrades with Gen AI

Discord - Developing Rapidly with Gen AI

Vimeo - Building Video Q&A with RAG

MORE MUST-READ BREAKDOWNS

AI Agent Playbooks (16 playbooks)

AI Agent, Agentic AI Use Cases (2195)

Agentic AI Case Studies (19 cases)

AI Strategy Playbooks (16 playbooks)

Playbooks for AI Leaders (16 playbooks)

LEVEL UP WITH GENERATIVE AI ENTERPRISE

Generative AI is evolving rapidly in the enterprise, driving a new era of transformation through agentic applications.

Twice a week, we review hundreds of the latest generative and agentic AI best practices, case studies, market dynamics and innovation insights to bring you the top 1%...
